Written by Jen Selby and Carl Shan

Introduction

What is Machine Learning?

Machine learning is a collection of techniques for learning from examples (many, many examples). The idea is that rather than having humans come up with the rules ("if this, then that, unless this other thing is true, in which case..."), we let the computer figure out the relationships in our data.

Machine Learning Categories

There are many different lists classifying common machine learning tasks. Here is one such list:

Types of Tasks

- Classification: Which group/type does each data point belong to? Typically, there are a finite number of groups or labels that are known ahead of time.

- Clustering: Which data points are related to one another? Often, you specify how many groups you want the algorithm to find, but it otherwise makes the groups on its own.

- Regression: Predicts a numerical value for a given data point, given other information about the data point. Another way to think about it is that it learns the parameters of an equation that gives the relationship between certain variables in the data.

- Translation: Transforms one set of symbols into an equivalent set of symbols of a different type. For example, it could be translating between different foreign languages or translating word problems into mathematical equations.

- Anomaly Detection: Which data points are out of the norm?

- Generation: Given examples of good output, produce similar but novel output.

Types of Learning

- Supervised learning is trained on datasets that include an output that the machine should be generating.

- Unsupervised learning has no pre-specified answers. The goal is to organize or represent the data in some new way.

- Semi-supervised learning uses both samples that have desired outputs (also called labels) as well as unlabeled data.

Linear Regression

Ordinary Least Squares Linear Regression (OLS) is a tool for predicting a continuous numerical output from numerical inputs. It's one of the foundational tools in statistical modeling. It's used to make predictions on new data, but it's also used to estimate the "importance" of certain variables in predicting the outcome.

You have probably seen examples of lines before. They have the equation \(y = mx + b\) where \(m\) is the slope and \(b\) is the intercept of the line.

The goal of a linear regression model is to find the best model parameters (meaning the best values for \(m\) and \(b\)) to get a line that fits the datapoints.

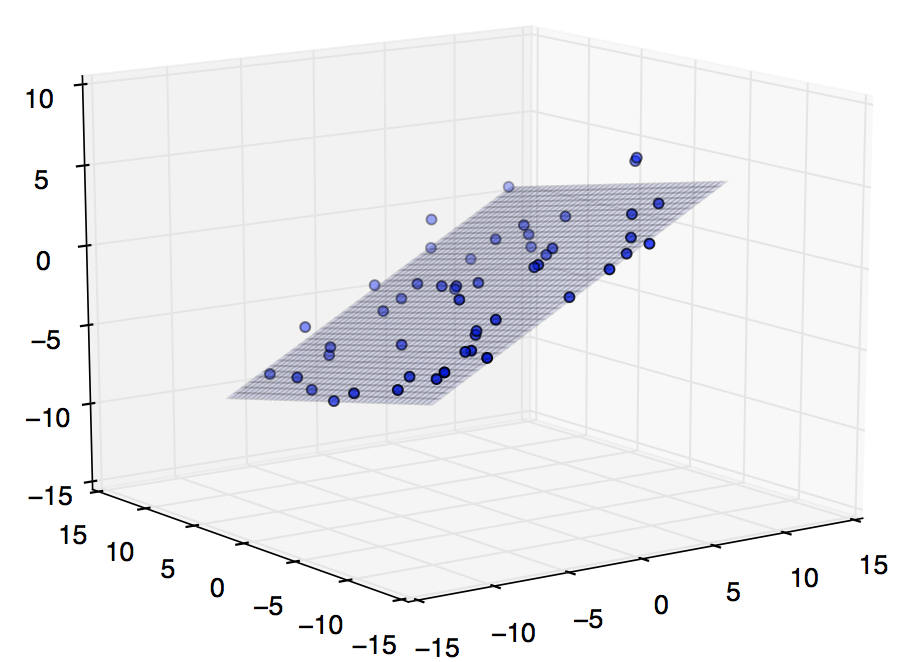

If you have more than one feature in your data, that means more than one \(m\). With two features, you are trying to find a plane that fits the datapoints. As you add more features (I've worked with models that had about 40,000!), you are then trying to find the best surface for your datapoints.

As an example, you might be looking to predict the age of a tree based on two features: its height and its circumference. Linear regression may work well for this, because all of the inputs are numbers and the output is a number as well. It is fine to predict that a tree is 5.27 years old.

In contrast, linear regression would not work for predicting the type of tree, because the output is not numeric. Even if you assigned numbers to each type of tree, the output would not be continuous: what would it mean to predict 5.27, a quarter of the way between elm and spruce? This would be called a classification problem rather than a regression.

Training (fitting) your model

So how do you find the "best" parameters of a model? What does it mean to be "best" anyway?

The Linear Regression model is constructed (or trained) by giving it sample inputs and the corresponding outputs. The outputs are called the labels of the data. In the tree example above, we would have the data for a bunch of trees, with each input having two numbers, one for the height and one for the circumference, and a matching output that gives the known age of the tree.

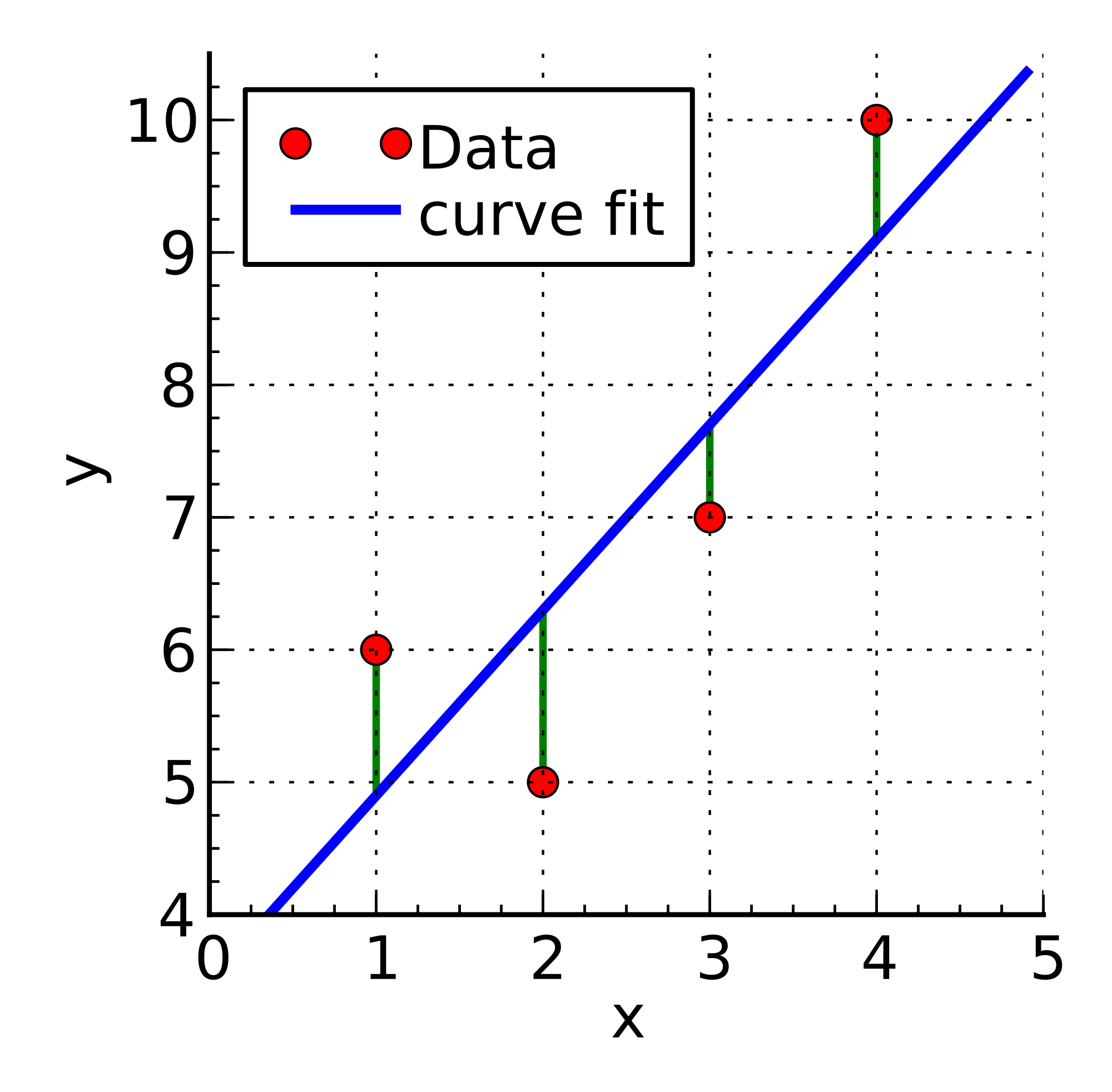

The linear regression training algorithm finds weights for each feature of the input so as to minimize the sum of the squared errors between the predicted outputs and the desired outputs. This gives a best-fit surface through the data. In this case, the sum of squared errors is the function that you are trying to minimize when you estimate the linear model. The squared error for each input datapoint is $$squaredError = (predictedValue - actualValue)^2$$

Linear Regression will attempt to minimize the sum of all of the \(squaredErrors\) by calculating the error for each datapoint and then summing them.

The prediction for any particular input will be the sum of all of the values for each feature multipled by the weight for that feature, or $$predictedValue = intercept + sum(featureVal_1 * featureWeight_1 + featureVal_2 * featureWeight_2...)$$

In other words, for a model with one feature, you are trying to find the parameters \(m\) and \(b\) so that the total error is minimized. You want to find a line that minimizes the sum of the squared distances between each point and the line.

The function you are minimizing is known as the cost function or loss function. You can watch this Khan Academy video if you would like some more details about linear regression and the idea of squared errors.

Training a linear regression model involves linear algebra and calculus, which most of you have not seen, but if you would like the details, see section 5.1.4 of this book or read this online presentation.

Prediction

Armed with these trained weights, we can now look at a new input set, multiply each part of the input by its corresponding weight, and get a predicted output. For instance, for the data set shown in the graph below, if my new input is x=4, we're going to guess that y will be 9.2.

If we have two inputs instead of just one, then we get something that looks like the graph below; we are finding a best fit plane instead of a line. Now, we give an x1 and x2 in order to predict a y. We can continue adding as many inputs per data point as we want, increasing the dimensionality. It becomes a lot harder to visualize, but the math stays the same.

At the end of the training in our tree example, we would have two weights, one for height and one for circumference, and an intercept constant. If we had the measurements for some tree, we would multiply each measurement by its weight and then add that together with the intercept to get the model's prediction for the age of that tree.

Limitations

One big limitation of linear regression is that it assumes that there is a linear relationship between the inputs and the output. If this assumption is violated but you try to build a linear model anyway, it can lead to some very odd conclusions, as illustrated by the infamous Anscombe's Quartet -- all of the datasets below have the exact same best-fit line.

Example Code

You can find a basic example of using the scikit-learn library at http://scikit-learn.org/stable/modules/linear_model.html#ordinary-least-squares and full documentation at http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LinearRegression.html

Exercises

Our Linear Regression Jupyter Notebook uses the scikit-learn Python library's linear model module to perform the regression and includes questions and suggested exercises. See the Setup and Tools section to get your laptop set up to run Jupyter notebooks with the required libraries.

Regularization: Ridge, LASSO, and Elastic-Net

When we use ordinary least squares regression (OLS), sometimes we have too many inputs or our estimates of the parameters in our linear model are too large, because we are overfitting to some of the specific examples in our training data. A lot of the inputs may be closely correlated as well, which leads to a number of problems.

This is a feature selection problem, meaning that what we want to know is what features or parts of our data are the most important ones for our model. Techniques for algorithmically picking picking "good inputs" are known as regularization.

LASSO and Ridge are both techniques used in linear modeling to select the subset of inputs that are most helpful in predicting the output. (LASSO stands for Least Absolute Shrinkage and Selection Operator.)

Ridge and LASSO have the following in common: they enhance the cost function in OLS with a small addendum -- they add a penalization term to the cost function that pushes the model away from large parameter estimates and from having many parameters with non-zero values.

The specific way that this penalization occurs is slightly different between Ridge and LASSO. In Ridge, you change the cost function so that it also takes into account the sum of the squares of each weight, also known as the L2 norm of the parameter vector. The boundary drawn is a circle, and you want to be on the "ridge" of the circle when minimizing your cost function. In LASSO, you penalize with the sum of absolute values of the weights, known as the L1 norm. The boundary is a square.

You can learn a lot more about Ridge Regression through this 16-minute video.

The penalization term will look like a Lagrange multiplier (if you've seen that before). It'll be <some penalization value you choose>* (the L2 norm of the estimate of your parameters).

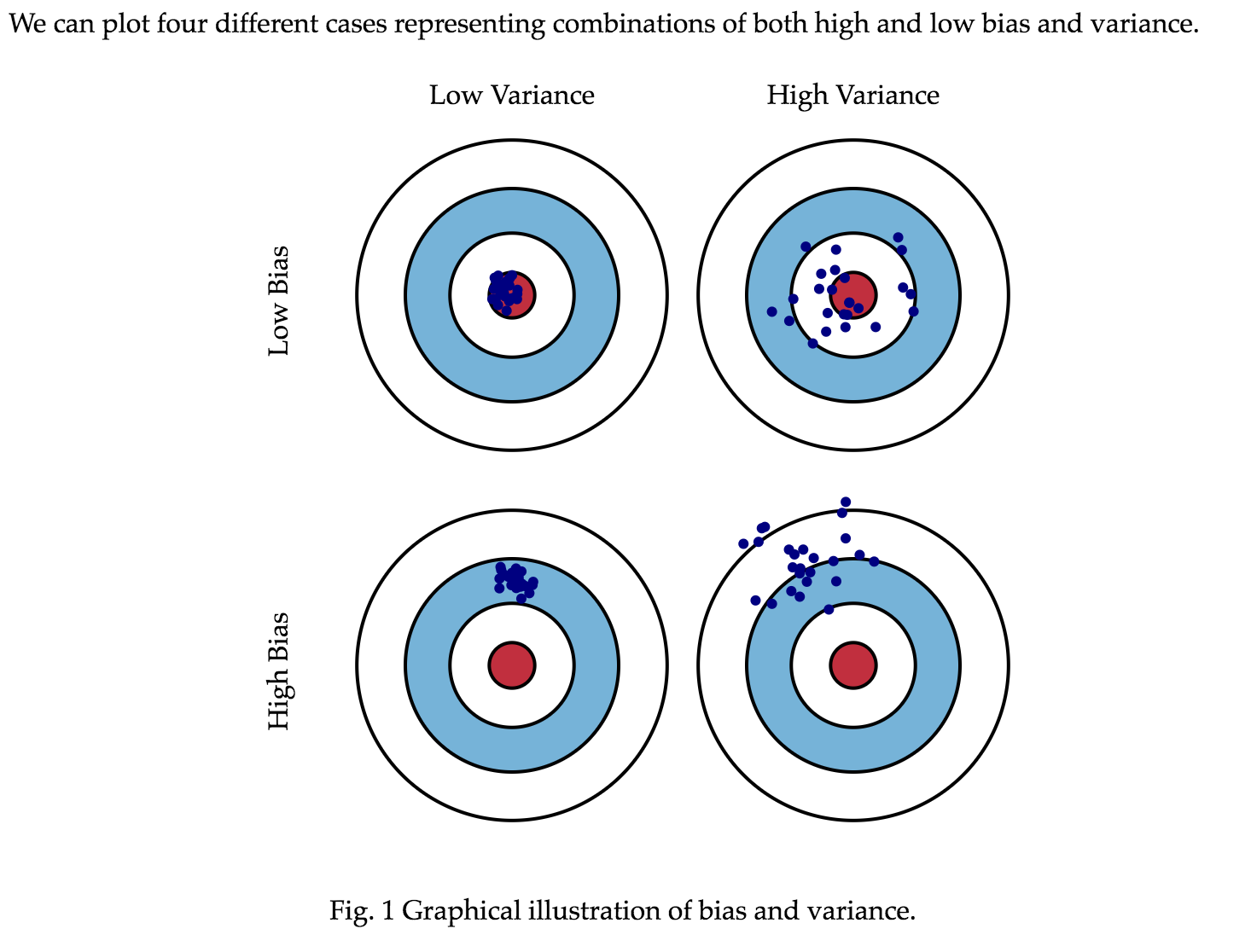

While Ridge regression will decrease the variance of your the estimates of your model parameters, it will no longer be unbiased, because we are deliberately asking it to choose parameter values that do not fit the exact dataset as well, in the hopes of having it transfer more easily to new data in the future. The bias-variance tradeoff is something that we will discuss in future classes.

LASSO will add inputs in one at a time, and if the new input doesn't improve the predicted output more than the penalty term, it'll set the weight of the input to zero.

Here's the same video creator explaining Lasso in a 7-minute video.

In the Elastic-Net version of linear regression, you combine the two penalization terms in some fractional amount (where the two fractions add up to 100%, so for example you can have 30% of the LASSO penalization and 70% Ridge).

Why does all of this help with the feature selection problem? Because the penalty given to each weight will only be outweighed by the benefit of the weight if that feature helps minimize the error for many points in the data. If there are just a few outlier points whose error might be minimized by having, say, a cubic term in the model, while other points are no more accurate when including that term, that will not be enough to overcome the penalty of having a non-zero weight for that feature, and the model will end up dropping the term.

Validation

In our look into linear regression, when trying to understand our models and whether or not they worked, we used two methods:

- We compared our model's learned parameters to the parameters we had used when generating the data.

- We looked at the predictions for a few data points.

Underfitting and Overfitting

There are two main things we are concerned about when trying to decide if we have a well-trained model.

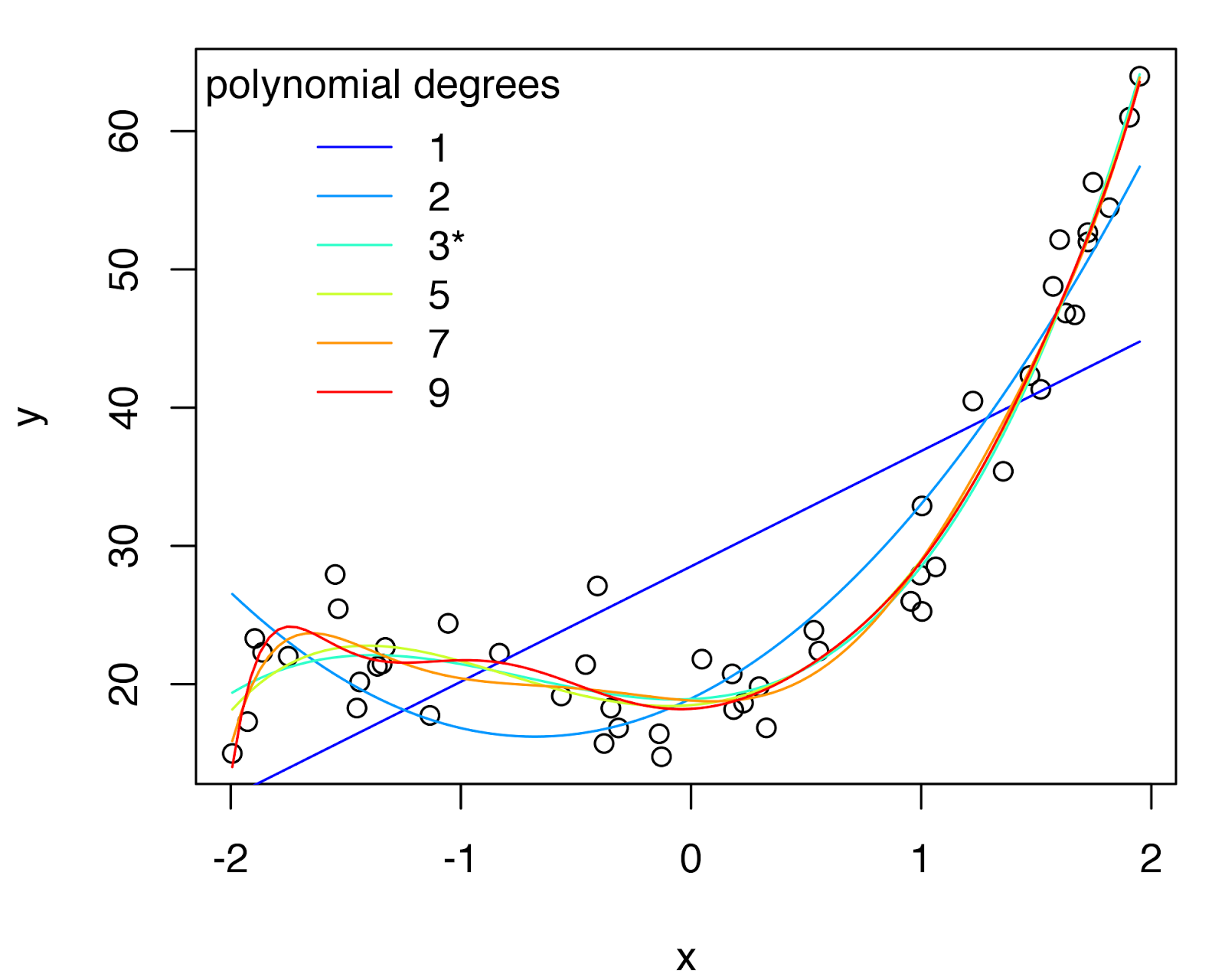

Underfitting means we didn't learn much about our data and cannot predict or describe it well. Maybe our model just doesn't match (e.g. we used a linear model and the relationship between the input and output is quadratic). Maybe we didn't have much data to learn from. Maybe our optimization algorithm failed in some way. Maybe we stopped the training process too early (an issue that can arise with some of the algorithms we'll look at later).

Overfitting happens when we can predict our training data really, really well, but if we try to predict any new observations, we don't do so well. Basically, we learned how to copy our data rather than learning some general rules. We would say that our model doesn't generalize well. This is like passing a multiple-choice test by memorizing the answers "a, a, c, d, d, a, b" instead of knowing why those were the right answers.

This image shows different possible models that have been trained on the points in the graph. The straight line is an underfit -- this does not look like a simple linear relationship. However, some of the higher-degree lines look like overfit -- the points at the bottom left probably don't actually suggest that the model needs to dip down there. A really severe overfit would draw a curve that passed through every single point.

The Bias-Variance Tradeoff

Image from Scott Formann-Roe's website

The underfitting/overfitting dilemma relates to something called the Bias-Variance tradeoff. If a model is heavily biased, that means it makes predictions that tend to be far away from the underlying "true" distribution of the data. In this sense, we can say that it has "underfit" the data.

However, if we build a model that "overfits" to the data at hand, it may generalize poorly to new data we example. We might say that our model has high variance because the exact model parameters will differ greatly depending on the training data at hand.

Read more about the Bias-Variance tradeoff.

Creating a Test Set

In order to figure out whether or not our model is overfitting, we need to use some new data that it didn't get to see when it trained. If it predicts this new data well, then we can be more confident that what it learned it training does in fact apply more broadly. We can this new data the test set.

In some cases, new data continues to come in, giving us a natural set with which to test how our model handles new data that it didn't train on. In many other cases, however, we have just one (possibly small) data set. What can we do to test for overfitting?

One answer is holdout, in which you randomly divide your data into training data and testing data. How big should each part be? Unfortunately, this is not an easy question to answer. It depends on the data you have, the model you're using, your cost function, how you're optimizing, and so on. In general, the more complex your model is, the more data you need -- in both training and testing. However, regularization can help a model perform better even with a small training set.

If you do not have enough data to make good sized training and test sets, you can try using cross validation. In this case, you split your data into several equal parts, enough parts that if you left just one of them out, you'd have enough data left over to train your model. Now, for each part, train the model on all of the data not in that part and test the model on that part. This can give you a big enough "test set" overall that you can make a decisions about which model to use (and then perhaps train it on your entire set).

See this lecture for more information about creating test sets for validation.

Validation Metrics

Once you have created a test set, you'll want some way to score how your model did. What measurement to use depends on what type of problem you're working on.

Validation Exercises

- Add validation code to one of the linear regression or classification notebooks. Assess the performance of the model. What are the best and worst possible scores it could get? Why do you think the model is getting the score you're seeing? How do the scores between the training and testing sets compare?

- Answer the following questions:

- Thinking about the R^2 metric used for evaluating regression, answer the following questions:

- What is the highest possible score you could get?

- If your model simply predicted the average value of the training set no matter what the input was, what score would you get on a test set whose average matched that of the training set?

- What is the lowest score that you can get?

- When using accuracy to measure your model's performance on a classification problem:

- What is the best possible score you could get?

- If your model always predicted the same class no matter what the input, what score would you get on a test set where 85% of the items were in that class?

- What is the worst possible score you can get on a dataset that only has two classes?

- A model gets a recall score of 0 for class A on a test set with classes A, B, and C. If you take one of the test items that is in class A and have this model predict what class it is, what will it predict?

- A model gets a precision score of 1 for class A on a test set with classes A, B, and C. If you take one of the test items that is in class A and have your model predict what class it is, what will it predict?

- If a model with classes A and B has an AUC score of 1 and you give it an item from the test set that is in class A, what class will it predict and what probability will it give for that class?

- If a model with classes A and B has an AUC score of 0 and you give it an item from the test set that is in class A, what class will it predict and what probability will it give for that class?

- Thinking about the R^2 metric used for evaluating regression, answer the following questions:

Regression Validation

The two most common validation metrics for regression problems are \(R^2\) and RMSE.

\(R^2\)

The equation for \(R^2\) is

$$ 1 - \frac{sum( (y_{pred} - y_{truth})^2)}{sum( (averageY - y_{truth})^2 )} $$

That is, the numerator is our usual sum of squared errors, and the denominator is the sum of squares of the difference between each point and the average y value. (See here for more information.) An \(R^2\) of 1 means the model perfectly predicts the output. \(R^2\) of 0 means the model is not any more predictive than just guessing the average output every time. A negative \(R^2\) means your model does worse than just using the average value.

However, as Anscombe's Quartet famously shows, you may have a high \(R^2\) even if you have severely mismodeled your data.

Root Mean Squared Error (RMSE)

The RMSE is the square root of the following equation:

$$ \frac{sum((y_{pred} - y_{truth})^2)}{numDatapoints} $$

This measurement represents the standard deviation of the residuals. Residuals are equal to the difference between your prediction and the actual value of what you're trying to predict.

The lower the RMSE of your model, the better it is.

You can also visually assess how well your model fit by plotting your residuals. If your regression model was a useful one, then the residuals should appear to be randomly distributed around the X-axis, and their average should be approximately zero.

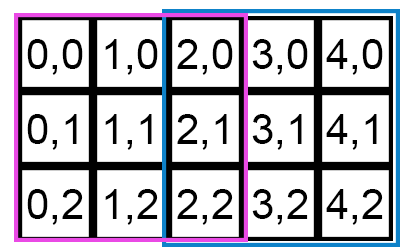

Classification

Classification methods are ones that help predict which group (AKA type or class) each data point (or set of observations) belongs to. They are supervised algorithms: you train your model by giving it a bunch of examples with a known group. (Sometimes, people talk about unsupervised classification, but in this course, we're referring to those methods as clustering, and we'll talk about them later.) Once the model is trained, you can give it a new example (again, a set of observations) and have the model predict which group this new item most likely belongs to.

For example, say I have a bunch of pictures of different species of penguins, and I want to know if the computer can learn to distinguish between them. I give my classification algorithm a bunch of these images and tell it which species is in each photo. After this training period, I now have a model that maps images to penguin species, and I can give it new images and see what species it thinks is in the image. In this case, my set of observations for each example are the pixel values of the image.

Binomial to Multinomial

Some of the classification algorithms are binomial, meaning they can only choose between two types. If you have multiple types to choose between, a multinomial problem, you can still use these algorithms with a one-vs-rest method. Basically, you train a different model for each class, one that will predict if an example belongs to that class or not to that class (that is, to one of the other classes). You run each example through all of your models to get the probability of it being in each class.

Using the penguin example again, I begin training my model for Adelie penguins. All images of Adelies are labeled with a 1, and images of any other species are labeled 0. I train a second model for Gentoo penguins. When training this model, I label all Gentoos 1 and everything else 0. After I have finished with each species, I can now predict the species of a new image. First, I run it through the Adelie model to get the probability it is an Adelie. Then, I run the Gentoo predictor, and so on. At the end, I can go with the class that was given the highest probability by its model as my answer.

Common Classification Algorithms

- Decision Trees: This method produces a model that slices up the data space in a way that is useful for classification, giving a flowchart on how to classify a new example. To do this, it looks at how well each feature can split up the data into the different classes, picks the best splits, and then does it again within each of the chosen subgroups. To predict the class of a new example, you follow along in the flow chart using the observations for that example.

Decision Trees are rarely used on their own, but instead as part of an ensemble like Random Forest. - Random Forest: This method trains many decision trees on randomly-chosen subsets of the data with randomly-chosen sets of features used for the decision points in the tree. To make a prediction, it combines the answers from all of the trees. Random forest is usually the best classification technique to use, unless you are working with images or text.

- Naive Bayes: This method is generally used to classify text (e.g. "This email is or is not spam"). The reason for the name is that it makes naive assumptions: By assuming that all of the observations are independent of one another, this method can efficiently compute probability distributions for each class that maximize the overall likelihood of the data. These assumptions are almost never true, but the classifications it predicts are often good enough.

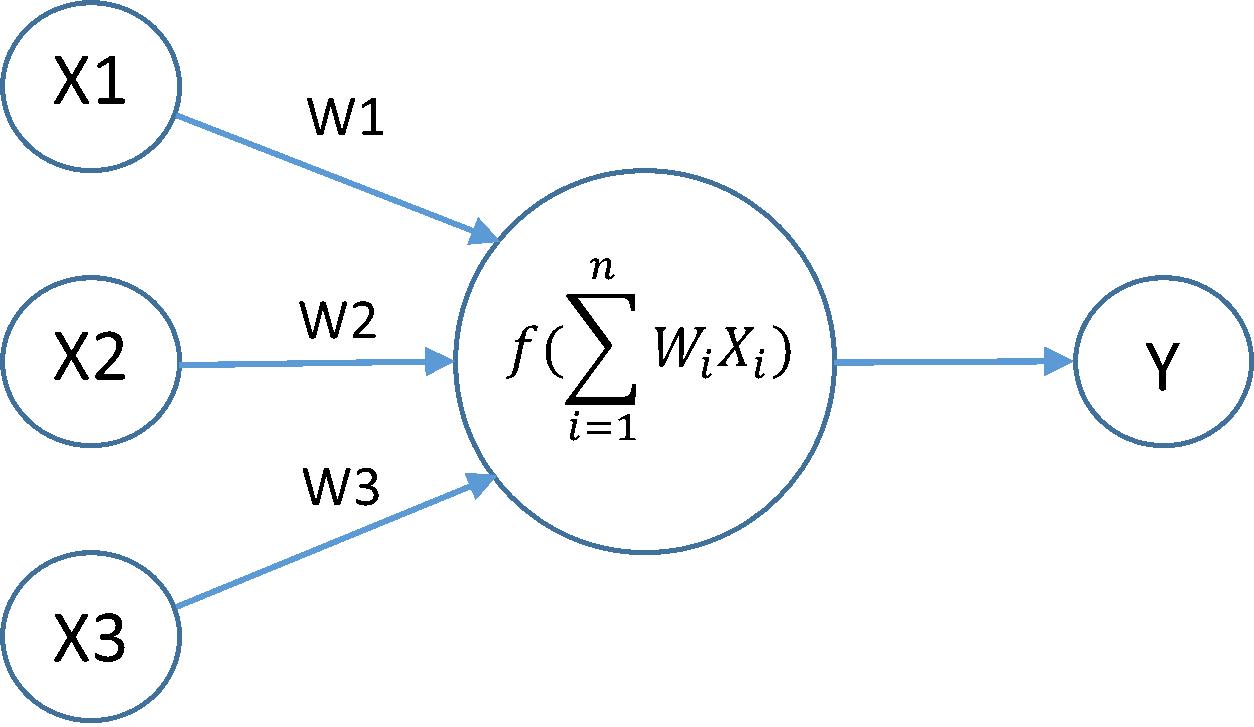

- Logistic Regression: This method finds weights for each observation in a datapoint to find a best fit through the data, like linear regression. Unlike in linear regression, it doesn't fit the weights and observations directly to the output, but uses the logit function so that it outputs probabilities.

- Support Vector Machines: This method is trying to find lines (or planes or hyperplanes) that group all examples of the same class together and separate examples of different classes from one another. Instead of looking at the distance from every point to the hyperplane (as logistic regression does), it is trying to maximize the distance between the hyperplanes and the points nearest to them. In order words, it is looking for areas of empty space between different groups. The distances between points are computed using kernel functions, which make computation more efficient. In order to find non-linear relationships, you can use a non-linear kernel function.

Decision Trees

Decision Trees allow you to classify data by creating a branching structure that divides up the data. By following the tree for a particular input, you can arrive at a decision for that input. Below is visualization of someone making a decision around giving a loan:

The above image is an example of a decision tree the loan approval officer of a bank might mentally go through when trying to figure out whether to approve a loan or not. The bank may want to try to minimize the risk of giving a loan to someone who won't pay it back (this is them trying to minimize their "error function").

Of course, minimizing error does not always make our models fair or ethical. Basing our predictions on data from the past makes intuitive sense, but it can cause problems, because the future does not always look like the past and many times we don't want to replicate the mistakes of the past. We'll talk more about these ethical issues later.

How do you create a Decision Tree model?

Imagine you're given a large dataset of people and whether they're vampires or not. You want to build a machine learning model that is trained on this data that can best predict whether new people are vampires or not.

Say your dataset has the following 3 features that you can use as inputs to train your model:

- How long it takes the person until their skin starts burning in the sun

- How many cloves of garlic they can eat before they have to stop

- Whether their name is Dracula or not.

A Decision Tree model is essentially trained on your data by doing the following:

- Select the attribute whose values will best split the datapoints into their classes: for example, select the Name-Is-Dracula attribute because everyone named Dracula in this dataset is a vampire

- Split along that attribute: split the data into those for whom the data is Yes and No, or is less than or greater than some value

- For each of these buckets of data (called child nodes), repeat these three steps until a stopping condition is met. Examples of stopping conditions:

- There's too little data left to reasonably split

- All the data belong to one class (e.g., all the data in the child node are not-vampires)

- The tree has grown too large

Measuring the best split: Gini or Entropy

How do you choose the best split? The sklearn library provides two methods that you can choose between to find the best split: Gini (also known as Impurity) and Entropy (also known as Information Gain) that you can give as inputs to a Decision Tree model. Both are a measurement of how well a particular split was able to separate the classes.

The worst split for both Gini and Entropy are ones that split the two nodes such that classes are evenly divided between the two nodes (that is, both nodes contain 50% of Class A and 50% of Class B).

How do you choose between Gini and Entropy? Great question. There's no hard and fast rule. The general consensus is that the choice of the particular splitting function doesn't really impact the split of the data.

To learn more about the difference between these, and other, splitting criteria, you can use resources such as:

- https://www.slideshare.net/marinasantini1/lecture-4-decision-trees-2-entropy-information-gain-gain-ratio-55241087

- https://sebastianraschka.com/faq/docs/decisiontree-error-vs-entropy.html

You can also take a look at this detailed tutorial on implementing decision trees.

Exercises

See our Decision Tree Jupyter Notebook that analyses the iris dataset that is included in scikit-learn and has questions and suggested exercises.

Random Forest

The Random Forest (RF) is a commonly used classification technique. The reason it's called a Random Forest is because it builds large numbers of decision trees, where each tree is built using a random subset of the total data that you have.

The RF algorithm is what is known as an ensemble technique. The word "ensemble" refers to a group of items or people. You may have heard it used in the context of music, where a group of musicians who perform together are known as an ensemble. By having each musician perform independently, yet also together with the group, the music they create is superior to any of their own individual abilities. The same may be true of Random Forest. Its predictive power is superior to that of any particular tree in the forest because it "averages" across all of the trees. Read more about ensemble methods in sklearn's documentation (including a heavy emphasis on ensemble tree-based methods) and in this essay.

The image below illustrates how an ensemble technique may be a better fit than any particular algorithm:

(Credit: https://citizennet.com/blog/2012/11/10/random-forests-ensembles-and-performance-metrics/)

The grey lines are individual predictors, and the red line is the average of all the grey lines. You can see that the red is less squiggly than the others. It's more stable and less overfit to the data.

Random Forests perform so well that Microsoft uses them in their Kinect technology.

Algorithm Behind Random Forest

(Some material taken from https://citizennet.com/blog/2012/11/10/random-forests-ensembles-and-performance-metrics/)

- Choose how many decision trees you will build for your model.

- To build each tree, do the following:

- Sample N data points (where N is about 66% of the total number of data points) at random with replacement to create a subset of the data. This is your training data for this tree.

- Now, create the tree as you would normally create a Decision Tree, with one exception: at each node, choose m features at random from all the features (see below for how to choose m.) and find the best split from among those features only.

Regression: Minimizing sum of squared errors

In the case that you use RF for regression, the construction of the forest is the same with the exception of how you choose the splits. In the case of regression, the nodes are split now based upon how well the split minimizes the sum of squared errors (real values - predictions) for each split.

Choosing m

There are three slightly different systems:

- Random splitter selection: m=1

- Breiman's bagger: m= total number of features

- Random forest: m much smaller than the number of features. The creator of the RF algorithm, Leo Brieman suggests three possible values for m: ½√v, √v, and 2√v where v is the number of features.

The intuition behind choosing m is that a lower m reduces correlation between trees, but it also reduces the predictive power of each tree (higher bias, lower variance in predictions). A higher m increases correlation between trees but also the predictive power of each tree (lower bias, higher variance in predictions).

Why is this? Well if the trees are uncorrelated (e.g., low m), they are unlikely to have shared many features in common and variances in individual trees will be smoothed over by the other trees in the forest (e.g., a funky tree will be outvoted by its peers if its peers used different features). Similarly, a lower choice of m will lead to trees that are less predictive (higher bias), but the RF as a whole will have a lower variance.

Using Random Forest

- Regression: Run a sample down all trees, and simply average over the predictions of all trees.

- Classification: A sample is classified to be in the class that's the majority vote over all trees.

Upsides of Random Forest

- The Random Forest minimizes variance and is only as biased as a single decision tree. Bias is the amount that your algorithm is expected to be "off" of the true value by. Variance describes how "unstable" your predictions are. The bias of a RF is the same as the bias of a single tree. However, you can control variance by building many trees and averaging across them. This is also described as the balance between overfitting and underfitting.

- You get estimation of generalized error for free since each tree is built only on two thirds of the data. For each tree, you can take the one third that you didn't use and treat that as your "test set" to calculate error. Your estimate of generalization error is the average of all errors across all trees in your Random Forest.

- This algorithm is parallelizable, making it faster to create. That's because each tree can be built independently of other trees.

- It works the best on many types of classification problems (particularly ones that don't involve images or other highly structured data), outperforming K-NN, logistic regression, CART, neural networks, and SVMs.

- The algorithm is intrinsically multiclass/multinomial (unlike SVMs and binomial logistic regression).

- It can work with a lot of features since you only take a random subset of features. It doesn't seem to suffer from the "curse of dimensionality."

- It can work with features of different types (same with a decision tree, not so with SVM or logistic regression).

- You also get an intuitive sense of variable importance: randomly permute data for a particular feature, and looks to see how much generalized error increased.

- The RF is a non-parametric method, which means it doesn't assume a particular probability distribution. Logistic and linear regression make certain assumptions about the data (e.g., normally distributed errors).

Downsides of Random Forest

- The RF, just like a tree, interpolates between datapoints, which means it doesn't fit with outliers well. Since the RF just takes an average of the "nearest neighbors" of the new datapoint, if it isn't close to any of the neighbors RF will underestimate or overestimate it.

- The RF decision boundary is the superposition of all of the decision boundaries of each of its trees. The trees' decision boundaries are discrete, oblique decision boundaries, making RF's decision boundary non-continuous.

- It's less interpretable than a decision tree. You can't easily draw out a tree for another human being to interpret.

Variations of Random Forest

- AdaBoost: "Adaptive Boosting" The way that it works is you build a bunch of trees, except each tree is built "knowing" the error of the previous one. It "overweights" these errors so that the successive tree tries to fit to these errors more.

- Gradient Boosted Trees: Boosting is the general class of techniques used to modify learning algorithms. AdaBoost is one example of a boosting. Gradient Boosting is similar to AdaBoost except that rather than using weights to "update" future trees, future trees are created by finding the "gradient". You can learn more by watching this 11-min video or reading this essay and following some of the references it links to.

Suggested Exercise

Add code in our Decision Tree Jupyter Notebook to use Random Forest to perform the analysis, as suggested in the final exercise.

Binomial Logistic Regression

Inputs: numerical (like linear regression)

Output: a number between 0 and 1, inclusive (that is, a probability).

Supervised: To get an initial model, you need to give it sample input/output pairs, where each output would be either 0 or 1.

The motivation: You have sets of observations that fall into two different types. Given a new set of observations, you'd like to predict which type it is. Your model should output the probability that it is the first type. Probabilities need to fall in the range [0-1], but with linear regression, there is nothing that restricts the output. What happens when your model generates outputs that are far above 1 or below 0? Logistic regression uses a function called the logit that constrains the output between 0 and 1.

For example, you want to classify someone as being a vampire or not. You know their height, weight, how many seconds they can last in the sun before burning up and how they would rate the taste of garlic on a scale of 1-10. Your desired output is one of two things: "vampire" or "not vampire."

After you train your logistic regression model using the heights, weights, time in the sun, and garlic preferences of beings whose vampire status is known, it can predict the probability of a new person based on their characteristics (e.g., Harold is about 90% likely to be a vampire).

Using the model as a classifier: Once you have a trained logistic regression model, if you want it to predict which type an input is (vampire/not vampire), then you need to pick a threshold above which to classify someone as a vampire. For example, if you picked p = 0.5, then you could say that for all new people, if passing their data through the model yielded a probability > 0.5, you would be getting the holy water and garlic ready. If you wanted to err on the side of being prepared for vampires, you might drop the threshold, say to 0.25, so even if the model said it was less likely than not, you would still assume vampire if it was at least 25% probable..

Using the model to learn feature importance: Perhaps what you really want is to know how each of your inputs affects the chance that a sample is one category or another. Logistic regression can give you weights for each of your inputs, the numbers it is multiplying each input by within the logit function to get the output probabilities. This is what gives it the name regression: that it learns coefficients for each feature.

The math:

Just like in linear regression, we are looking to find the best fit for parameters that we would multiply by each input. That is, if we have four inputs we are looking at, we want to find a, b, c, d, and intercept that best fit the data for an equation

$$y = a*x_1 + b*x_2 + c*x_3 + d*x_4 + intercept$$

Below, we'll represent the right side of the above equation as "inputs*parameters" as a shorthand.

Since your output is a probability, it needs to satisfy two constraints:

- Your output always needs to be positive.

- Your positive output always needs to be between 0 and 1.

How do you ensure the first condition? You use the exponential function in constructing your regression equation. The output of the exponential function approaches zero as the inputs get smaller, but it never outputs below zero. Specifically, you could try

$$y= e^{inputs*parameters}$$

Now how do you also satisfy the second condition? You can use the above expression in a fraction, getting a probability with

Notice how as the exponential expression approaches zero, so does the whole fraction. As it grows toward infinity, both the numerator and denominator grow, so the overall fraction approaches one. You can see this in the shape of $$y = \frac{e^{x}}{1 + e^{x}}$$

By Qef (talk) - Created from scratch with gnuplot, Public Domain, Link

This ends up being your regression model. You can perform some algebra so that you have the "parameters * input" on one side of the equation, and you will end up with

$$log_2 (p/(1-p)) = inputs*parameters$$

The log2(p/(1-p)) is known as the "logistic function" or "inverse logit", which gives the technique its name.

Training: As with linear regression, we need to have a cost function that we are minimizing. In this case, it is more complicated than that of linear regression, but it is similar to minimizing the sum of the squares of the errors. For some of the gritty details, check out the logistic regression section in this lesson.

To learn more about logistic regression, and to see a lot of the math, check out the following resources:

Example Code

Take a look at this analysis which uses logistic regression to learn from a real dataset.

Suggested Exercises

These two notebooks contain questions and exercises.

- This Logistic Regression Jupyter Notebook uses scikit learn to fit a logistic regression model to generated data.

- This Logistic Regression Jupyter Notebook uses scikit learn to fit a logistic regression model to the iris dataset that is included with scikit-learn.

Naive Bayes

Imagine the following scenarios: You have a bunch of pieces of text. Maybe you have wikipedia pages and you want to know which categories they fall into. Or you have a bunch of emails and you want to know which ones are spam. You have a collection of documents and you want to know if any show evidence of people planning a crime. These are classification questions: we want to use the words in each document to decide which category it falls into.

When doing text classification, you can end up with a lot of features, if each word is a different feature. In our earlier examples, we were looking at datasets that had 2-4 different features (things like height, petal length, sepal width, and so on). As of January 1 2019, an estimate for the number of words in the English language was 1,052,010. That's a lot of potential features!

For these types of natural language processing problems, we want a technique that can very quickly train a model with a large number of features. Many of the other classification types take a lot more time as the number of features go up, but Naive Bayes scales linearly in the number of features. That is, if there are twice as many features, it takes twice as long, and not, say, four or eight times as long.

Training: Calculating Probabilities

For these questions, we can provide an answer if we have a good enough answer to the question "What is the probability that my document belongs in class C, given the contents X of the document?"

We express "What is the probability of C given X?" in mathematical shorthand as: \[ P(C | X) \]

This is called the posterior probability. Bayes Theorem is a famous theorem in statistics and probability. It tells us that the probability of C given X is equal to the probability of C times the probability of X given C, scaled by the probability of X, expressed as \[P(C|X) = \frac{P(C)P(X|C)}{P(X)} \]

\(P(C)\) is called the prior. It is the probability that a message will be in that class if you don't know anything about it, your prior information about the classes of messages before you started learning from your data. Commonly, you would use an uninformative prior, making it \(\frac{1}{n}\), where \(n\) is the number of classes, for every class. This would mean that your prior belief is that each class is equally likely for a document if you don't know what is in the document. If you feel your dataset is a very representative of everything you might see in the future, then you could instead use the fraction of documents that are in the class in the dataset (or perhaps a previous dataset), giving yourself a more informed prior. For example, if half of the documents were in class 1, and a quarter each in classes 2 and 3, then you would use \(\frac{1}{2}\) as the prior for class 1 and \(\frac{1}{4}\) for classes 2 and 3.

\(P(X | C)\) is called the likelihood. This is the probability that we would see the words X if the document was in class C. This is the hard part to compute. If we wanted to do it accurately, then we would be trying to compute a giant joint probability, where essentially we would need to figure out what the probability of a specific word is given the class of the document and every other word the document. Instead, we can make the naive (and almost certainly wrong) assumption that all of the words are independent of each other (we're saying that if machine is in the document, learning is no more or less likely to be!). Making this assumption means that we can just multiply each \(P(x | C)\) -- where \(x\) is a single word -- to get the overall \(P(X | C)\). \(P(x | C)\) might be estimated simply, perhaps as the number of documents in class \(C\) that contain word \(x\) over the number of documents in class \(C\) or as the number of times that word \(x\) appears in a document of class \(C\) over the total number of words in all documents of class \(C\), but usually we would use a measure that takes into account the length of documents and how often that word normally appears. We will talk about this and other ways to calculate \(P(x | C) \) when we cover dataset processing later in the semester.

\(P(X)\) is called the evidence. In general, in any document, how likely are you to see this set of words? Maybe it's quite likely to see a particular word (like the) in some class of documents, but if you're also likely to see that word in many classes, then it shouldn't increase the probability of any of those classes as much as it would if it were only seen mostly in one class. Again, this would take awhile to truly calculate, as you are essentially calculating \(P(X | C)\) for every \(C\). However, making the same naive assumption as above, it becomes fairly easy. Note: While this term is important for getting out a probability for \(P(C | X)\), you don't need it if all you want to know is what class the model thinks your word is in. You can just calculate the numerator for each class to see which one is the biggest. The sci-kit learn documentation warns that Naive Bayes is a decent classifier, but the probabilities are usually not very good, so it might not be worth it to do the full calculation.

Predicting

What we want to return is the most likely class of a particular document. We can use the equation above to calculate our estimate for the numerator of \(P(C | X)\) for each class \(C\), and then our answer is the class whose numerator is the highest. This is called maximum a posteriori (MAP) estimation.

More information

- http://scikit-learn.org/stable/modules/naive_bayes.html

- https://www.analyticsvidhya.com/blog/2015/09/naive-bayes-explained/

- https://www.cs.cmu.edu/~tom/mlbook/NBayesLogReg.pdf

Suggested Exercises

See our Naive Bayes Juypter Notebook that analyses the 20 newsgroups dataset that is included in scikit-learn and contains questions and exercises.

Support Vector Machines

Support vector machine, or SVMs, are a common classification method used in binary classification.

The goal of SVMs is to construct a linear decision boundary (which is often called a hyperplane) that separates two classes. However, the technique doesn't just pick any hyperplane that separates two classes of points. After all, there may be many potential hyperplanes that could cleanly separate your two classes. Examples of decision boundaries are below:

Source: http://www.med.nyu.edu/chibi/sites/default/files/chibi/Final.pdf

So the question is: of all possible hyperplanes, which is the best? The intuition behind SVMs is that they construct a hyperplane that has the largest distance (or "margin" or "gap") between the closest members of both classes. An image representing this idea is below:

Source: http://www.med.nyu.edu/chibi/sites/default/files/chibi/Final.pdf

The vectors describing the datapoints on the boundaries are called "support vectors", which give SVMs their name.

How do you optimize for finding the "best" hyperplane?

The equation describing a plane is \(w * x + b = 0\). The 'b' parameter moves the plane and w controls the "orientation" of the plane.

The distance between parallel hyperplanes is \(\frac{abs(b1 - b2)}{length(w)}\) where \(abs\) is the absolute value function. (For those of you who have seen some linear algebra before: \(length(w)\) is the L2 norm of the vector w.)

If you want to maximize the "gap" between different classes, you want to minimize \(length(w)\). You can do so by using techniques from a branch of mathematics called quadratic programming.

The Kernel Method

So here's an important question: what if your data can't be separated by a flat hyperplane? One solution you can try is to transform your data so that your data becomes separable once transformed. You do so by applying a kernel function (read more on Wikipedia) to your input data, transforming them so that the data is now linearly separable.

Source: http://www.med.nyu.edu/chibi/sites/default/files/chibi/Final.pdf

In this case, the final classification is given by whether the distance from the decision boundary is positive or negative.

Things to ponder:

- SVMs are used for binary classification problems in which you have two classes you're trying to separate. What are some ideas that you have about how you can use SVMs to separate multiple-classes?

- What is the output of SVMs, and how does that differ from Logistic Regression? What are the advantage and disadvantage of using the SVM output to classify points vs. Logistic Regression?

Additional Resources:

- An introduction to SVMs (with quite a bit of math)

- Introduction with illustrations and code

- Explanation of the name "SVM"

- Lecture slides -- but ignore the title of the talk; this is not a simple concept

- Some example code in scikit-learn that analyses the iris dataset

Classification Validation

It is always a good idea to validate your model, as we have discussed.

Accuracy

For classification, you can use a simple measure of what ratio of test items were placed into the correct category over the total number of test items. This is called accuracy. However, this hides a lot of potentially important details about how your model is doing. This can be particularly bad if you have far more examples of some classes than others.

Precision, Recall, and F-Measure

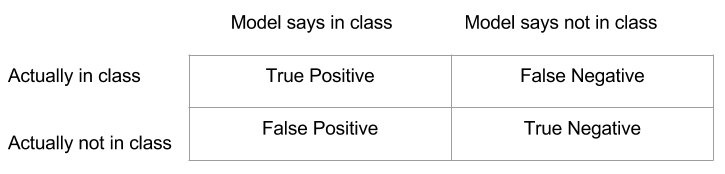

Another option is to look at each class individually and see how well the model did in different aspects. For this, for each class, we put each test data point into one of four categories.

- true positive: the model correctly predicted this point was in this class

- false positive: the model predicted this point was in this class, but it actually wasn't

- true negative: the model correctly predicted this point was not in this class

- false negative: the model predicted this point wasn't in this class, but it actually was

Precision is looking to see how often our guesses about points being in this class were right: \(\frac{TP}{TP + FP}\)

Recall (or True Positive Rate or Sensitivity) is looking to see how what fraction of points that were actually in this class were correctly identified: \(\frac{TP}{TP + FN}\)

F-measure combines precision and recall, using the harmonic mean: \(2 * \frac{P * R}{P + R}\)

The ROC Curve and AUC

Another popular metric used in a lot of academic papers published on classification techniques is the Receiver Operating Characteristic curve (AKA ROC curve).

This plots the False Positive Rate on the x-axis and the True Positive Rate on the y-axis.

- The True Positive Rate is the same as described above for Recall (they mean the same thing): \(\frac{TP}{TP + FN}\).

- The False Positive Rate is \(\frac{FP}{FP + TN}\)

The way the curve is created is by changing the values of the threshold probability at which you classify something as belong to the "positive class". If I am creating a model to give me the probability that it is going to rain today, I could be really cautious and carry an umbrella if the model says there is even a 10% chance of rain or I could live life on the edge and only bring my umbrella if the model was 99% sure it would rain. What threshold I pick may have a big impact on how often I am running through the rain or lugging around an unnecessary umbrella, depending on how confident and correct the model is.

(Source: https://www.medcalc.org/manual/roc-curves.php)

The area under the ROC curve is known as the AUC. It is mathematically equivalent to the probability that, if given two random samples from your dataset, your model will assign a higher probability of belonging to the positive class to the actual positive instance. This is known as the discrimination. The better your model is, the better it can discriminate between positive instances and negative ones.

The dotted line in the image above would be a random classifier. That is, it would be as if you built a classifier that just "flipped a coin" when it was deciding which of two samples was more likely to be in the positive class. You want to do better than random so try to make sure your models are above this dotted line!

If you'd like a video explanation of ROC and the area under the curve, this video does a fine job of detailing things.

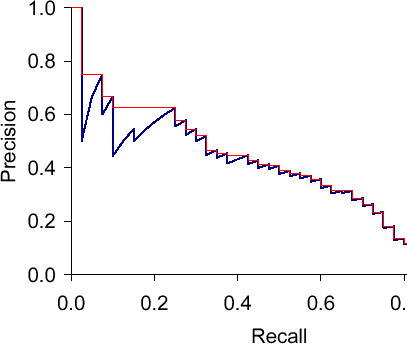

The Precision-Recall Curve

The precision-recall curve is another commonly used curve that describes how good a model is.

It plots precision on the Y-axis and recall (also known as true positive rate and sensitivity) on the X-axis.

Perplexity

Perplexity is sometimes used in natural language processing problems rather than some of the other classification validation measures. It uses the concept of entropy (in the information theory sense) to measure how many bits of information would be needed on average to encode an item from your test set. The fewer bits needed, the better, because it implies your model is less confused about how to represent this information. The perplexity of a model on your test set is

$$ perplexity = 2^{\frac{-1}{n}\sum_{i=0}^n\log_{2}(p_i ) }$$ where \(n\) is the number of test points in your data and \(p_i\) is the probability your model output of the \(i^{th}\) point being in class 1.

For instance, if you have a classification problem, for each test point, you would see what probability \(p_i\) your model gives for the correct class (and only the correct class) for that \(i^{th}\) point and take \(log_{2}(p_i)\). Add all of these up, take the average, and raise 2 to that average.

See Wikipedia and this blog post for more information.

Other Classification Metrics

You can see a page Carl created for other measurements of binary classification metrics: http://carlshan.github.io/

Ethical Issues

There are a number of challenging and nuanced ethical problems that relate to machine learning and artificial intelligence. Below, we lay out some of the topics that concern researchers and others about ways in which machine learning, deep learning, and massive amounts of data collection currently affect our world and might do so in the future.

- Privacy

- Bias

- Abuse and Misuse

- Model Explainability

- Responsibility

- Automation of Labor

- Existential Risks

Organizations Focused on AI Ethics

Other Ethics Resources

The list of topics above is not exhaustive nor is it the only way to group these ideas. Here are some other treatments of this topic:

- Deep Mind

- MIT Ethics course with a syllabus that includes lots of links to other resources

- Concrete Problems in AI Safety from Google

- Troubling Trends in Machine Learning Scholarship

- Fairness, Acountability, and Transparency in Machine Learning

- Cathy O'Neil wrote a book about ethics issues in Machine Learning and Big Data (and we have a copy of this book for loan). She also frequently writes about these topics in Bloomberg:

Ethics Exercises

- Choose one of the ethics topics, read through the notes, and summarize what you learned to a classmate.

- Find another example in the news of an ethical issue involving machine learning.

Privacy

The success of machine learning techniques relies upon having large repositories of data collected. For certain types of problems, companies or researchers may collect this data through logging the interactions we have with website and apps (such as which products we purchase on Amazon, what pages we like on Facebook, what sites we visit, what we search for, and so on).

Repositories of data may be collected without our explicit consent. Even when we do give consent (such as when we agree to the Terms and Services without actually reading it), our data eventually be used for purposes that are not surfaced to us.

Many people are unhappy with the possibility that their information may be used by companies or governments to monitor, label or target them based upon their behavior.

Examples and readings:

- This Washington Post article from October, 2016 explains worries about China’s proposed state plan to create a “social credit system”.

- Target apparently realized a young girl was pregnant and sent her advertising for baby supplies before her family knew.

- Scientists were able to identify many users based on (supposed-to-be) anonymous Netflix viewing data

- Car insurance companies use information from other sources to group you with similar people (by occupation, credit score, even Facebook posts.) and set your rate based on what they have done rather than just on what you have done

- Employees might be tempted to misuse large troves of data for personal or unknown purposes

- The Darker Side of Machine Learning - Oct 26, 2016 TechCrunch

Bias

Because in machine learning we are training models by example, we have to be careful about using examples that reflect the bias of our current world. The examples below are perhaps some of the most contentious or troublesome, but bias can also come in much more mundane forms (like finding sheep and giraffes where there are none). Bias can also creep in given the way we describe our goals, if the most efficient way to achieve them is not actually something we wanted. We always want to think carefully about every step of the design of our model so that we are not having the model learn things we never intended.

Gender Bias

Google researchers trained a machine learning system with years worth of news stories, representing the words they contained as vectors (series of numbers that represent a coordinate in some high-dimensional space). In this form you could perform arithmetic on these word vectors. For example, you could subtract “Man” from the vector that describes “King” and add “Woman” and get back “Queen” as a result. Very cool! However, if you tried to subtract “Man” from “Software Engineer” and add “Woman” you got “Homemaker”. This may reflect some aspects of our current society, but we did not want to train our system to believe this!

Another study showed that image-recognition models were learning sexist ideas through photos, and seemed to actually be developing a stronger bias than was in the underlying data.

The data that we use to train our algorithms are not inherently unbiased nor are the researchers who train and validate them; as a result, the systematic inequities or skews that persist in our society may show up in our learned models.

Racial and Socioeconomic Bias

"Like all technologies before it, artificial intelligence will reflect the values of its creators. So inclusivity matters — from who designs it to who sits on the company boards and which ethical perspectives are included. Otherwise, we risk constructing machine intelligence that mirrors a narrow and privileged vision of society, with its old, familiar biases and stereotypes." -- Microsoft researcher Kate Crawford See full article

One scrutinized machine learning system that may contain wide-reaching racial and socioeconomic bias is in that of predictive policing. Predictive policing refers to a class of software that claims to be able to use machine learning to predict crimes before they happen. A report that surveyed some of largest predictive policing software noted a few ways that predictive policing can have negative unintended consequences. They wrote how there can be a ratchet effect with these issues: due to bias stemming from which crimes are reported and investigated, the distortion may get worse if unrecognized, because “law enforcement departments rely on the evidence of last year’s correctional traces—arrest or conviction rates—in order to set next year’s [enforcement] targets.”

Propublica published an investigation into a similar risk assessment algorithms, finding that they were racially biased. The response from the company that developed the algorithm and Propublica's response to their response illustrate how different validation metrics can lead to different conclusions about whether there is or is not bias. Deciding how to be as fair as possible in a society that is currently unfair is not always straightforward.

A study tested various facial classification systems and found that they misgendered black people 20-30 times more often than white people.

If humans responding to resumes are racially or otherwise biased, then training a model based to pick out resumes based on ones that were previously picked out will reflect that same problem. Another study of bias in resumes illustrates sensitivity to selection of training data. This study found no racial bias in resume responses, which might indicate improvements in this area. However, the researchers write "unlike in the Bertrand and Mullainathan study, we do not use distinctly African American-sounding first names because researchers have indicated concern that these names could be interpreted by employers as being associated with relatively low socioeconomic status". Instead they used last names of "Washington" and "Jefferson" which are apparently much more common among black families than white ones. They did not appear to have tested whether or not the typical resume reader would actually associate those last names with a particular racial group.

In the spring of 2020, there was a lot of discussion about the PULSE model that creates high-resolution images from low-resolution images. The model tended to create images of people who looked white when given low-resolution images of people of color. The ensuing argument was around whether this racial bias could be solely or mostly attributed to a biased dataset, a common claim that oversimplifies the problems with many models. Many people recommended a tutorial by researchers Timnit Gebru and Emily Denton for a good explanation of the broader issues.

Political Bias

If machines are tasked with finding things you like, you may end up only ever seeing things that conform to your prior biases. Worse, if models are training to optimize for articles getting shared, then it is mostly sensationalist news that is more likely to be wrong that will be promoted. See for instance experiments with people seeing the other side of Facebook. In this study, some were swayed, but many felt their beliefs were reinforced, perhaps because of the tenor of the types of things likely to be shown.

Strategies for Fixing Bias

There have been a number of papers that have proposed different strategies for combatting bias in machine learning models. This article summarizes many of these suggestions, and also links to the underlying research. Also see the list of organizations in the intro section.

Abuse and Misuse

Throughout the history of our species, people have invented a large variety of useful tools that have bettered our lives. But with each tool, from the spear to the computer, there comes the potential for abuse or misuse.

Some researchers demonstrated ways to “trick” machine learning algorithms to label images as gibbons. By inserting some “adversarial noise” it’s possible to perturb the algorithms to assign an incorrect label to an image.

This is a gibbon.

This could potentially be used to deliberately harm others. For instance, might it be possible to somehow alter the clothing of a person so that self-driving cars do not identify them as a pedestrian? This is just one example of intentional misuse of technologies. How do we build safeguards around machine learning technologies that make it harder for them to be abused?

What are examples of models that we should be most worried about attackers tricking? There's a variety of issues that may come up due to how an organization uses, abuses or misuses the data and algorithms in machine learning.

Google put out a list of principles they intend to follow in building their software. (See reading below). Axon, a US company that develops weapons products for law enforcement and civilians (including tasers) arranged an AI Ethics board (link below).

Readings

- Axon AI Ethics Board

- Google's AI Principles and concerns about Google's AI Principles

- Artificial Intelligence Is Ripe for Abuse, Tech Researcher Warns - The Guardian

- Microsoft's Failure Modes in Machine Learning

Model Explainability

Even simple models can be notoriously hard to interpret.

For instance, in this altered version of our very simple "plant A" vs. "plant B" logistic regression, negative weights might not always mean that an increase in that feature makes the item less likely to be in the target class. It could just mean that the other features were much more important predictors. If we use the wrong model (as we saw when we try to fit a linear model to a parabolic dataset in the linear regression exercise), the weights might be entirely meaningless.

This problem only gets worse as the models get more complicated and less well understood, like the neural networks we'll be looking at soon. If a machine learning model decides that you shouldn't get a job, that you shouldn't get a loan, that you are a bad teacher, or that you are a terrorist, how do you argue with that? There might be no one thing that you can point to for where it went wrong. You may not be very comforted to hear that the model predicts these things quite well, and that you might just be in the small percentage of misclassifications. This is only made more complicated by the fact that these models are often not made public.

There is a lot of research into explaining what models are learning. Here are some examples.

- http://yosinski.com/deepvis

- https://homes.cs.washington.edu/~marcotcr/blog/lime/

- https://towardsdatascience.com/explainable-artificial-intelligence-part-3-hands-on-machine-learning-model-interpretation-e8ebe5afc608

- https://distill.pub/2018/building-blocks/

Responsibility

One question that touches upon all aspects of the above risks around machine learning is that of culpability: who is responsible for the negative outcomes that arise from these technologies? Is proper use of machine learning something that we can teach or assess?

If a self-driving car injures another person, who is liable? The engineering team that implemented and tested the algorithm? The self-driving car manufacturer? The academics who invented the algorithm? Everyone? No one? How do we even decide the right thing to do in bad situations?

As another example of how hard it can be to assign blame, “a Swiss art group created an automated shopping robot with the purpose of committing random Darknet purchases. The robot managed to purchase several items, including a Hungarian passport and some Ecstasy pills, before it was “arrested” by Swiss police. The aftermath resulted in no charges against the robot nor the artists behind the robot.” Source: Artificial Intelligence, Legal Responsibility and Civil Rights

In an article by Matthew Scherer published in the Harvard Law Review titled: Regulating Artificial Intelligence Systems: Risks, Challenges, Competencies, and Strategies (link to analysis), Scherer posed a number of different challenges that related to machine learning regulation:

- The Definitional Problem: As paraphrased in an analysis by John Danaher, “If we cannot adequately define what it is that we are regulating, then the construction of an effective regulatory system will be difficult. We cannot adequately define ‘artificial intelligence’. Therefore, the construction of an effective regulatory system for AI will be difficult.”

- The Foreseeability Problem: Traditional standards for legal liability describe that if some harm occurs, someone is liable for that harm if the harm was reasonably foreseeable. However, AI systems may act in ways that even the creators of the system may not foresee.

-

The Control Problem: This has two versions.

- Local Control Loss: Humans who were assigned legal responsibility over the AI no longer can hold control over it.

- Global Control Loss: No humans control the AI.

- The Discreetness Problem: “AI research and development could take place using infrastructures that are not readily visible to the regulators. The idea here is that an AI program could be assembled online, using equipment that is readily available to most people, and using small teams of programmers and developers that are located in different areas. Many regulatory institutions are designed to deal with largescale industrial manufacturers and energy producers. These entities required huge capital investments and were often highly visible; creating institutions than can deal with less visible operators could prove tricky.”

- The Diffuseness Problem: “This is related to the preceding problem. It is the problem that arises when AI systems are developed using teams of researchers that are organisationally, geographically, and perhaps more importantly, jurisdictionally separate. Thus, for example, I could compile an AI program using researchers located in America, Europe, Asia and Africa. We need not form any coherent, legally recognisable organisation, and we could take advantage of our jurisdictional diffusion to evade regulation.”

- The Discreteness Problem: “AI projects could leverage many discrete, pre-existing hardware and software components, some of which will be proprietary (so-called ‘off the shelf’ components). The effects of bringing all these components together may not be fully appreciated until after the fact.”

- The Opacity Problem: “The way in which AI systems work may be much more opaque than previous technologies. This could be ... because the systems are compiled from different components that are themselves subject to proprietary protection. Or it could be because the systems themselves are creative and autonomous, thus rendering them more difficult to reverse engineer. Again, this poses problems for regulators as there is a lack of clarity concerning the problems that may be posed by such systems and how those problems can be addressed.”

Who is responsible for unintended negative outcomes of machine learning algorithms?

Automation of Labor

One concern many have about the increased capacity of machines to mimic or perform human behavior is that of automation. Why should a company expend all its resources to hire a human, train them, pay benefits, deal with workplace all while knowing that their employee may quit at any moment? Why not simply replace a human with a machine that you don’t have to feed, cloth, or navigate awkward conversations with? Well, there’s a clear answer right now: much of the labor humans currently perform can’t yet be automated away. Over time, however, more tasks fall into the purview of machines, machines that may be controlled by a very small number of powerful people.

A great deal of automation of labor has already occurred in our history, such as during the Industrial Revolution. Economies adapted and people shifted into new industries. Will that continue to happen in the future, or is it different this time? How should retraining happen?

Even if there will be new jobs for people in the long run, that doesn't necessarily mean it won't be painful for many in the short term. One policy proposal that has collected academic and governmental interest at different times and has resurfaced again recently is that of Universal Basic Income (UBI), in which all citizens are given a living stipend independent of what work they do. As a result, they will be able to subsist on a livable wage even if there are few human jobs.

A great deal of jobs that are likely to be automated away are ones that are fundamentally procedural (such as parts of accounting, law, and perhaps soon software engineering) or require a lot of simple physical movement (driving, packing, shelving, delivering).

What jobs are least likely to be automated away in the near future?

Additional Readings

- Automation and Anxiety - The Economist

- Why Technology Favors Tyranny - Yuval Noah Harari, The Atlantic

Existential Risks

One of the big overarching concerns around machine learning is that it could eventually produce general artificial intelligence. There exist a plethora of movies/books hailing AI bringing about a Terminator-esque doomsday scenario. Counterposing these media, other movies and books proclaim that AI will bring about an utopian world.

It’s not obvious which outcome, if either, will occur in a world in which we have strong AI.

Due to the rapid progress of fields like Deep Learning, there is growing academic and industry work related around curtailing the existential risk of AI. For example, the Open Philanthropy Project recently published a report indicating that they’d be investing significant resources towards funding initiatives that reduced the chance of AI-caused existential risk. (Open Philanthropy Project report: link)

Below are some areas of concern that have been expressed.

- The Superintelligence problem: AI that is capable enough will become self-augmenting, making itself more and more powerful despite any safeguards we put around it.

-

The values embedding problem: We want an AI to be “good” but we don’t have a rigorous, clear and universally agreed definition of “good” that is easily expressible in code. This problem may be made worse by the following two ideas by philosopher Nick Bostrom:

- The orthogonality thesis: Intelligence and morality are orthogonal to each other. That is to say that the two attributes don’t have a strong correlation with one another. The implication of this is that an incredibly advanced AI cannot be trusted to naturally deduce how to be “good."

- Instrumental convergence thesis: Despite the above idea, Bostrom also argues that irrespective of any AI’s goals, there are a number of instrumental or intermediate goals that all AIs will pursue, since these intermediate goals are likely to be useful for a wide range of goals. Examples of instrumental goals include e.g., self-preservation and cognitive enhancement.

- Unintended consequences: You command your AI to build as many paperclips as it can. To maximize this goal, your AI turns the entire planet into scrap material that it can use to create as many paperclip factories as possible.

Additional Readings

- Benefits and Risk of AI - University of Oxford's Future of Humanity Institute

- Open Philanthropy’s research around AI: link

- The Value Learning Problem: link. Nate Sores of the Machine Intelligence Research Institute (MIRI)

- Landscape of current work on potential risks from advanced AI - Open Philanthropy Project

- The Ethics of Artificial Intelligence by Nick Bostrom and Eliezer Yudkowsky: link

- Superintelligence by Nick Bostrom

- Visualization of ideas from the book - LessWrong

Data Processing

When starting out with a new dataset, you want to take some time to understand it. For numerical columns, what does the distribution look like? Find the min, max, and average. Look at a histogram or scatter plot of each feature. For text columns, make a set to see what all the unique values are, and perhaps look at the frequency of each.

Oftentimes in real-world problems, the datasets we're analyzing are messy, inaccurate, incomplete or otherwise imperfect. For example, the textual datasets we're looking at may have typos. Datasets may have missing entries or features of different data types (e.g., text vs. numbers).

To effectively practice machine learning, we need to learn various ways we can handle these different scenarios. Here are some of the types of data situations you may encounter and some suggestions as to how to deal with them.

- Interval, Ordinal, or Categorical

- Text

- Null Values

- Transforming Numerical Values

- Feature Interactions

- Proxy Values

Data Processing Exercise

Pick a new dataset and figure out how to get it (or some subset of it) in a usable state, using at least one of the data processing techniques in the notes about dataset processing.

You can start with this Jupyter Notebook, if it is helpful for you.

Here are some places where you can find datasets:

Interval, Ordinal, Or Categorical

Data may come in many different flavors. Let's look at three of the main possibilities.

Interval

This is the numerical data we're most familiar with. Interval data lie along some continuum and the intervals between data are consistent. For example, salary is an interval variable because it can be represented meaningfully as a number and the difference between, say, $40,000 and $50,000 is the same amount of money as the difference between $70,000 and $80,000 (Though note that even here we need to be careful: $10,000 is a much bigger raise to someone making $40,000 than someone making $70,000. We can't always rely on things being "the same" in different contexts.)

Ordinal

Ordinal values also lie along a continuum, but unlike with interval data, the gaps between values of ordinal data may be inconsistent. For example, a survey question may allow you to rate chocolate ice cream on a scale of 1-5. However, the difference between a 1 and a 2 is not guaranteed to mean the same as the difference between a 4 and 5. Perhaps for you, both a 4 and 5 mean that you like the ice cream quite a bit. But a 1 means you absolutely hate chocolate and a 2 means you can tolerate it. In this case, while the data has some ordering, the gaps between the data don't hold consistent meaning. As a result, taking the average of survey results in which responses are clearly ordinal may lead to tricky results. In addition, Ordinal data is often discrete, meaning that intermediate values have no meaning -- no family actually has 2.3 children. In practice, ordinal data is often treated the same as interval, but it is something to keep in mind if that is a limitation of your model.

Categorical

Data that come in categories but have no intrinsic ordering are called categorical. For example, you may have data on a person's hair color. Perhaps a value of 1 means the hair is colored black. 2 means blonde. 3 means brunette and so on. There is no inherent meaning to these numbers. You can't take the average of your data and say that the average hair color is 1.5, so that means the average person's hair is between blonde and black.

Many forms of statistical analysis and machine learning models may assume that your data is implicitly of an interval form. Calculating averages, medians, standard deviations make the most sense when your data is on a continuous interval. So what happens if you have data that you suspect is ordinal or categorical?

For example, you may have data on a person that looks like:

| age | height (in) | Hair color | Likelihood of Voting |

| 29 | 70 | brunette | Low |

| 33 | 67 | black | High |

| 19 | 66 | blonde | Very high |

One common approach for categorical data is to use One-Hot Encoding. In this approach, you can turn a column of categorical into several columns of 0s and 1s. For example, if you have a column of a person's hair color and it can take on 4 values, you can use One-Hot Encoding to create 4 columns, where each column is a color and a 0 in that column means the hair is not that color and 1 means the hair is that color.